A Puppet and a Robot Walk into a Moonshot Factory

How puppeteering with my parents led to a career in robotics

I like to tell people I was raised by a troupe of traveling puppeteers. This is technically true, but it conjures an inaccurate picture. My parents are professional puppeteers (they perform in elementary schools, libraries, community centers, theaters, and arts festivals), but we didn’t live out of a covered wagon. I grew up in a house in California and went to regular elementary school, but we also spent time touring the state, as well as occasionally going to more far-flung places like Japan, Mexico, and Europe.

I first performed with my parents when I was two years old (a five-second bit part as the sun in “The Eensy-Weensy Spider”) and began performing “professionally” when I was five. I performed hundreds, maybe thousands, of times throughout my childhood, in over a dozen shows and in 13 countries, and earned $5 a show, which I spent on science fiction novels and Magic cards.

I’m the one in the middle

While puppetry was my childhood profession, high school is where I discovered what would become my later passion and career: robots. When my best friend joined the FIRST robotics team at my high school, I did too. After I graduated, that experience inspired me to spend the next few years earning two degrees in engineering and trying to be wherever the most exciting robotics was happening. That’s why today I’m at X’s Everyday Robot project, where we are trying to bring robots into people’s everyday lives by letting them learn to succeed through practice.

The surprising similarities between puppets and robots

I’ve found my puppetry skills and techniques are surprisingly useful in robotics. For example, when you design a new puppet or robot, the first thing you have to decide is: how is it going to move? Where are the joints going to be? How will you control them?

This duck puppet has the same “degrees of freedom” as this robot

Likewise, once you figure out how it should move, you need to figure out how you are going to control it. For puppets you usually have to move the controls away from the joint, bringing them closer to you, the puppeteer. For robots, you often want to put the motors close to the joint to decrease floppiness.

Actuation is a big design constraint for both

My parents taught me everything I know about puppetry, so a few weeks back I invited them to give a workshop for teams at X about the similarities between puppetry and robotics.

The biggest similarity between the two fields is that both take a non-living object and make it seem alive. I’ll let you in on one of the secrets of puppetry: this is easy, because humans have an incredibly powerful drive to interpret everything as though it were alive. We can’t help it. We attribute human emotion to our computers, our cars, our vacuum cleaners. When an object has a face and moves, we project thoughts and desires onto it. My parents and I perform in full view of the audience, holding the puppets in front of us. After our shows, children would often ask us, “How did the puppets move?” and “How did the characters talk?”

When we wear black no one can see us?

These children had watched a whole show where the puppets were moved by hand and voiced by puppeteers standing directly behind them, but the puppets were so real that they had forgotten the puppeteers. Their brains had edited us out.

For puppeteers, this tendency to anthropomorphize anything that moves is a powerful tool to let our audience believe in our characters — even if all we are doing is waving some painted foam around on a stick.

Painted-foam-on-a-stick in dog form.

For roboticists, this is a double-edged sword. It’s easy for people to attribute emotion and intent to our robots — which can be awkward when they have the emotional and situational intelligence of a graphing calculator. Their ability to read the room is really, really lacking. So, oftentimes we are trying to use the language of puppetry to convey non-intelligence, or at least help set expectations that our robot isn’t going to be able to handle any of the curveballs you might throw at it. If the robot looks at you and says “Hello, how can I help you?” you might expect that it will understand any request, which is not where we are right now.

A robot failing to catch a curveball

This brings us to the big difference between puppets and robots. Puppets have the full force of a human intelligence behind them. And they get a script: we know everything they need to do ahead of time. But our robots are doing improvisation all the time, and without a human intelligence behind them, even simple things that humans take for granted are hard.

For example, eye contact is one of the most powerful tools for seeming alive and competent. Here we see a robot driving along, turning its “head” to look at a person, and then continuing on its way.

“Oh, hello there.”

That ‘eye contact’ makes the robot seem more capable and social. (Does it encourage people to set their expectations too high, or does it make the robot more acceptable in the space? Who knows! This kind of robotics is all so new.)

With a puppet, you’d do this by deciding, with your super-powerful brain, that this was a good time to look up at a person, and moving your hand to do it. For a skilled puppeteer this would probably be a nearly automatic action, done while concentrating on the dialogue or the walking motion.

Here’s what had to happen to make it work on a robot:

The robot has two depth sensors: a laser radar (lidar) on the front that gives a wide 270-degree view of the world around it, and a depth camera in the head. The navigation code wants to use the head camera some of the time, to check the blind spots in its navigation plan. We don’t want to write special-case “look at a person while driving” code into our navigation system, because there are lots of other things the robot might want to look at. So we had to add a way for other systems to request permission to use the head for a while, and the navigation code has to be able to cope with temporarily losing permission to move the head.
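Sharing the head this way is a classic shared-resource arbitration problem. The sketch below is one minimal way to do it in Python, assuming a simple priority-with-preemption scheme. Every name here (`HeadArbiter`, `NavigationHeadUser`), the priority values, and the speed numbers are hypothetical illustrations, not the project’s actual design.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional

# Hypothetical callback: called with True when a client gains the head, False when it loses it.
OnChange = Callable[[bool], None]


@dataclass
class HeadLease:
    owner: str
    priority: int


class HeadArbiter:
    """Hypothetical priority-based arbiter for a single shared actuator (the head)."""

    def __init__(self) -> None:
        self.current: Optional[HeadLease] = None
        self.waiting: Dict[str, int] = {}       # owner -> priority of pending requests
        self.callbacks: Dict[str, OnChange] = {}

    def request(self, owner: str, priority: int, on_change: OnChange) -> bool:
        """Ask for the head; returns True if granted immediately."""
        self.callbacks[owner] = on_change
        if self.current is not None and self.current.owner == owner:
            self.current.priority = priority    # holder re-requesting: just update priority
            return True
        if self.current is None or priority > self.current.priority:
            if self.current is not None:
                # Preempt the current holder and queue it to get the head back later.
                preempted = self.current
                self.waiting[preempted.owner] = preempted.priority
                self.callbacks[preempted.owner](False)
            self.waiting.pop(owner, None)
            self.current = HeadLease(owner, priority)
            on_change(True)
            return True
        self.waiting[owner] = priority          # wait until the head is released
        return False

    def release(self, owner: str) -> None:
        """Give up the head (or withdraw a pending request)."""
        self.waiting.pop(owner, None)
        if self.current is None or self.current.owner != owner:
            return
        self.current = None
        if self.waiting:
            # Hand the head to the highest-priority waiting client.
            next_owner = max(self.waiting, key=lambda o: self.waiting[o])
            self.current = HeadLease(next_owner, self.waiting.pop(next_owner))
            self.callbacks[next_owner](True)

    def holder(self) -> Optional[str]:
        return None if self.current is None else self.current.owner


class NavigationHeadUser:
    """Hypothetical navigation client that degrades gracefully without the head."""

    def __init__(self) -> None:
        self.has_head = False

    def on_head_change(self, granted: bool) -> None:
        self.has_head = granted

    def max_speed(self) -> float:
        # Illustrative assumption: without the head camera checking blind spots,
        # drive more cautiously using lidar alone.
        return 1.0 if self.has_head else 0.5
```

With preemption, a short “look at that person” request can interrupt navigation’s blind-spot checks without navigation knowing anything about people. The price is that navigation must treat the head as something it can lose at any moment, which in this sketch it does by slowing down until the arbiter hands the head back.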

We also have to be able to see humans. Because the camera’s field of view is fairly narrow, seeing people out of the “corner of our eye” means detecting them in the lidar point cloud (doing this well required breakthroughs in the state of the art in machine learning) and then tracking them over time. Finally, we need someone to decide what the rules are: when to look at a person, how long to look, when to look away, and when it is OK to look back.
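Those four rules can be written down as a small state machine. Here is a minimal Python sketch, assuming an upstream tracker that reports stable track IDs with distances in metres; `GazePolicy`, `update`, and all the thresholds are hypothetical and chosen only for illustration, not the project’s actual logic.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class GazePolicy:
    """Hypothetical rules for glancing at tracked people while driving.

    All thresholds are illustrative assumptions, not tuned values.
    """
    max_distance_m: float = 3.0          # only glance at people within this range
    look_duration_s: float = 1.5         # how long to hold a glance
    look_back_cooldown_s: float = 10.0   # minimum gap before looking at the same person again
    target: Optional[int] = None         # track ID currently being looked at
    look_started: float = 0.0
    last_looked: Dict[int, float] = field(default_factory=dict)  # track ID -> time we looked away

    def update(self, now: float, people: Dict[int, float]) -> Optional[int]:
        """Given tracked people (track ID -> distance in metres), return who to look at, if anyone."""
        if self.target is not None:
            in_range = people.get(self.target, float("inf")) <= self.max_distance_m
            if in_range and now - self.look_started < self.look_duration_s:
                return self.target       # how long to look: keep holding the glance
            # When to look away: the glance has lasted long enough, or the person left.
            self.last_looked[self.target] = now
            self.target = None
        # When to look (back): the person is in range and was not looked at recently.
        eligible = [
            (dist, track_id)
            for track_id, dist in people.items()
            if dist <= self.max_distance_m
            and now - self.last_looked.get(track_id, float("-inf")) >= self.look_back_cooldown_s
        ]
        if not eligible:
            return None
        _, self.target = min(eligible)   # prefer the nearest eligible person
        self.look_started = now
        return self.target
```

Calling `update` once per control tick keeps the policy decoupled from both perception and head control: it only answers “who, if anyone, should we be looking at right now?”. The cooldown is what stops the robot from staring, which is exactly the kind of social judgment a puppeteer makes without thinking.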

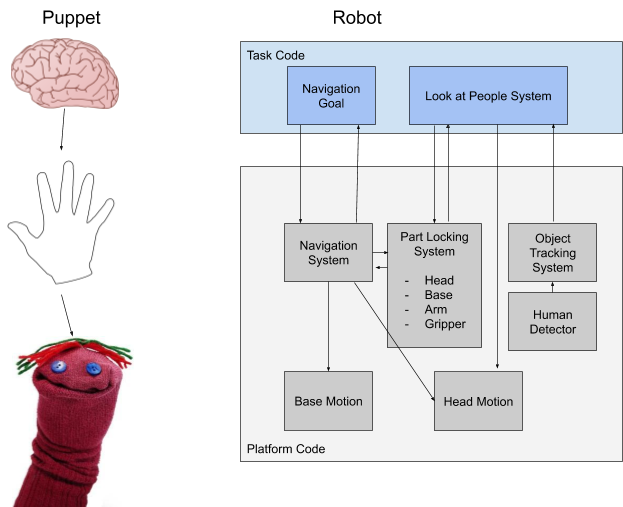

Because I am an engineer, here is a systems diagram.

As you can see, it’s significantly more complicated than a puppet. I’m not the first one to point this out: there is even a well-known observation, Moravec’s paradox, describing how it’s easy to create computers that beat the pants off us humans at checkers, but giving them the dexterity of a one-year-old is really hard. This surprises people, because checkers feels really difficult to us while opening doors feels very simple. But we’re learning checkers from scratch, whereas opening doors is easy for us because we’re using a brain and body evolved over millions of years to be good at moving things with our hands. Robots start both checkers and door-opening from zero… and doors turn out to be much harder.

But that challenge is part of what makes robotics so exciting. We have these really hard problems that we are starting to make good progress on, with new breakthroughs in learning all the time. And because no one has solved this kind of robotics, we’re still finding new ways to learn from ideas in fields like machine learning, computer vision, animation, psychology, experience design… and, yes, even puppetry.